
One of the most powerful and important components of the technology behind the modern web is NGINX (pronounced “engine-ex”). You have almost certainly interacted with it today. It is the high-performance software that powers a massive portion of the world’s busiest websites, including giants like Netflix, Dropbox, and WordPress.com. Understanding what NGINX is and what it does is no longer a job only for system administrators. For web creators, it’s a key piece of technical literacy that helps you understand why your site is fast (or slow) and how to build for scale.

Key Takeaways

- NGINX is More Than a Web Server: While its primary job is serving web pages, NGINX is a versatile tool most prized for its use as a reverse proxy, load balancer, and content cache.

- Built for Speed and Scale: NGINX uses an “event-driven” architecture, which is fundamentally different from older servers. This allows it to handle tens of thousands of simultaneous connections with very low memory usage, making it ideal for high-traffic sites.

- Core Functions: The five main uses for NGINX are: 1) a static web server, 2) a reverse proxy, 3) a load balancer, 4) a content cache, and 5) a media streaming server.

- Performance is Key: NGINX excels at serving static files (images, CSS, JS) and caching dynamic content (like a WordPress page). This dramatically speeds up your site, improves Core Web Vitals, and boosts your SEO.

- Security & Scalability: As a reverse proxy, NGINX acts as a secure gateway, hiding your backend servers and blocking attacks. As a load balancer, it allows your site to scale horizontally by distributing traffic across multiple servers, preventing downtime.

- You May Already Use It: If you use a high-quality managed hosting provider, you are likely already benefiting from a fine-tuned NGINX configuration without having to manage it yourself.

This article will dive deep into what NGINX is, the problems it solves, its most important functions, and why it’s a critical concept for modern web creators to grasp.

The Core Concept: What Is NGINX?

At its simplest, NGINX is a piece of open-source software that acts as a high-performance web server. But that definition barely scratches the surface. It was created specifically to solve the performance and scaling limitations of older web server software.

NGINX Explained: More Than Just a Web Server

When you type a URL into your browser, your browser sends a request to a “web server.” That server’s job is to find the requested files (HTML, CSS, images) and send them back to your browser, which then assembles the webpage.

This is the most basic function of NGINX. It can act as the primary web server for your site. However, its real power lies in its versatility. In many professional setups, NGINX is not the only server. Instead, it sits in front of other servers, acting as an incredibly efficient manager, traffic cop, and security guard.

The “Origin Story”: Solving the C10K Problem

To understand why NGINX is so special, we have to go back to the early 2000s. The internet was growing, and popular websites were starting to serve more and more users at the same time. This led to a famous challenge known as the “C10K problem”: how can a single web server handle ten thousand simultaneous connections?

At the time, the dominant web server was Apache, which remains widely used today. Apache’s traditional architecture was process-driven. This means that to handle a new connection (a new visitor), it would dedicate a “process” or “thread” on the server to that connection for as long as it stayed open. This is a very robust and flexible model, but it has a key weakness: each of those processes takes up a chunk of server memory (RAM).

If you have 10,000 visitors, you could have 10,000 active processes, which would consume a massive amount of RAM and bring the server to its knees.

In 2002, a Russian software engineer named Igor Sysoev started working on a solution. His goal was to create software that was built from the ground up for high concurrency and low resource usage. He released NGINX in 2004.

The NGINX vs. Apache Showdown: A Tale of Two Architectures

This brings us to the most common comparison in the web server world: NGINX vs. Apache. It is worth stressing that this is not about one being “better” in all cases. They are different tools with different architectural strengths, and in fact, they are often used together.

Apache’s Process-Driven Model

As we touched on, Apache has historically used a process-per-connection model (or a more modern thread-per-connection model).

- Factual Pros: Apache is incredibly flexible. Its .htaccess file system is famous among WordPress developers. It allows you to override server settings (like redirects or security rules) on a per-directory basis, which is very convenient. It has a massive library of modules and has been the backbone of the web for decades.

- Factual Cons: The process-driven model can be resource-intensive under heavy traffic. It’s generally considered slower than NGINX at serving static files (images, CSS, JS).

NGINX’s Event-Driven Architecture (The “Secret Sauce”)

NGINX’s design is completely different. It uses an asynchronous, event-driven architecture.

Here is what that means in plain English: Instead of creating a new process for every visitor, NGINX has a small, fixed number of “worker processes” (often just one per CPU core). A single worker process can handle thousands of connections at the same time.

It does this by working asynchronously. It doesn’t wait for one request to finish before starting the next. It listens for “events,” like a new connection arriving, a client sending data, or a backend server finishing a task. It quickly processes that event (e.g., grabs the data, sends it off) and then immediately moves to the next event in the queue.

This model is non-blocking. The worker does not sit idle waiting for a slow client or a slow network; while one connection is waiting, it processes events from the others. It’s always busy with the next available task.
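In configuration terms, the worker model described above comes down to two settings in the main nginx.conf file. The values below are illustrative assumptions, not tuned recommendations:

```nginx
# Main (global) context of nginx.conf

# Start one worker process per CPU core
worker_processes auto;

events {
    # Upper limit on simultaneous connections per worker, counting both
    # client connections and connections to backend servers
    worker_connections 4096;
}
```

On a four-core machine, this allows on the order of 4 × 4,096 = 16,384 concurrent connections, subject to the operating system's open-file limit (which the worker_rlimit_nofile directive can raise).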

- Factual Pros: This architecture is incredibly lightweight and efficient. It uses very little RAM, even with tens of thousands of connections. It is exceptionally fast at its core tasks, especially serving static files.

- Factual Cons: Configuration can be more abstract for beginners. There is no equivalent to .htaccess files. All configuration changes must be made in the central configuration files and the server reloaded, which is less flexible for shared hosting environments.

Is One “Better”? (The Neutral Conclusion)

No. They are both excellent, powerful tools. In fact, one of the most popular and robust web stacks for many years was to use both together.

In this setup, NGINX is placed “in front” of Apache.

- NGINX faces the public internet and handles all incoming requests.

- It uses its high-performance model to serve all the “easy” stuff: static files like images, CSS, and JavaScript.

- When a request comes in for dynamic content (like a WordPress PHP page), NGINX “proxies” that request to Apache, which is running on the backend.

- Apache does its job (processing the PHP, running its .htaccess rules) and sends the result back to NGINX, which then passes it to the user.
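A minimal sketch of this NGINX-in-front-of-Apache arrangement might look like the following. It assumes (hypothetically) that Apache listens on 127.0.0.1:8080 and that both servers share the same document root, /var/www/example.com:

```nginx
server {
    listen 80;
    server_name example.com;
    root /var/www/example.com;

    # Static assets: NGINX reads them from disk and serves them itself
    location ~* \.(css|js|jpg|jpeg|png|gif|svg|webp)$ {
        expires 30d;
    }

    # Everything else (including PHP pages) is proxied to Apache
    location / {
        proxy_pass http://127.0.0.1:8080;
        # Pass along the original host name and client details
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```

Because NGINX checks regex locations before falling back to the plain `location /` prefix match, requests for these asset types are answered by NGINX and never reach Apache.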

This “best of both worlds” approach has largely been replaced in modern stacks, where NGINX is now most commonly paired with PHP-FPM (FastCGI Process Manager) to handle dynamic PHP content directly, without Apache in the chain. This combination is a standard high-performance setup for modern WordPress hosting.

What Is NGINX Used For? The 5 Core Roles

NGINX is a jack-of-all-trades. Understanding its different roles is the key to seeing its true power. Here are its five primary uses, from simplest to most complex.

1. High-Performance Web Server

This is its most basic function. You can point NGINX at a folder of files, and it will serve them to the internet.

Excelling at Static Content

Where NGINX truly shines as a web server is in serving static content. These are files that do not change, like images, videos, CSS files, and JavaScript files.

Because of its event-driven model, NGINX can read these files from the server’s disk and send them to thousands of users at once with blistering speed and minimal resource cost. A typical WordPress site might have dozens of assets (images, CSS files, JS files) per page. Having NGINX serve these files quickly is a foundational element of a fast-loading website. This is a crucial first step in optimizing for Google’s Core Web Vitals.
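A few directives in the http context are commonly used to make static file delivery cheap. This is a fragment of nginx.conf, not a complete file, and the host name and path are hypothetical placeholders:

```nginx
http {
    include /etc/nginx/mime.types;

    # Have the kernel copy file data directly to the network socket
    sendfile on;
    # With sendfile, send the response header and the start of the file together
    tcp_nopush on;

    # Cache open file descriptors and metadata for frequently requested files
    open_file_cache max=10000 inactive=30s;
    open_file_cache_valid 60s;

    server {
        listen 80;
        server_name static.example.com;
        root /var/www/static;

        location / {
            try_files $uri =404;
        }
    }
}
```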

2. Reverse Proxy (The “Traffic Cop”)

This is arguably the most common and powerful use for NGINX. This concept can be tricky, so let’s use a clear analogy.

- A Forward Proxy is what you might use inside a company. It’s a server that sits between you and the public internet. When you (your browser) want to visit a website, your request goes to the proxy, and the proxy “forwards” it for you. This is used for filtering, security, and hiding the identity of clients.

- A Reverse Proxy is the opposite. It’s a server that sits between the public internet and your web servers. All visitors to your website talk directly to the reverse proxy. The reverse proxy then “forwards” those requests to one or more “backend” servers to do the actual work.

Think of a reverse proxy like the central receptionist for a large, secure building.

- All visitors (traffic) must go to the front desk (the NGINX reverse proxy).

- Visitors do not know (or need to know) the internal layout of the building or the direct phone number of the employee they want to see. They just know the building’s main address.

- The receptionist (NGINX) takes the request (e.g., “I’m here to see the billing department”).

- The receptionist finds the correct internal employee (a backend server) and passes the request on.

- The employee (backend server) gives the answer back to the receptionist (NGINX), who then hands it to the visitor.
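The receptionist analogy maps directly onto location blocks and the proxy_pass directive. In this sketch, the internal addresses 10.0.0.20 and 10.0.0.21 are hypothetical backend servers:

```nginx
server {
    listen 80;
    server_name example.com;

    # "Billing department": URLs under /billing/ go to one internal server
    location /billing/ {
        proxy_pass http://10.0.0.21:8080;
        proxy_set_header Host $host;
    }

    # Every other request goes to the main application server
    location / {
        proxy_pass http://10.0.0.20:8080;
        proxy_set_header Host $host;
    }
}
```

Visitors only ever see example.com; the 10.0.0.x addresses stay on the private network.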

Key Benefits of a Reverse Proxy

Why do this? This setup provides enormous benefits for performance, security, and scalability.

- Anonymity & Security: Your backend servers (where your actual website code and database live) are completely hidden from the public internet. Their IP addresses are not exposed. This dramatically reduces the “attack surface” for hackers. The reverse proxy is the only thing they can see.

- SSL/TLS Termination: Encrypting and decrypting HTTPS (SSL/TLS) traffic costs CPU time. You can let the NGINX reverse proxy do this heavy lifting. It “terminates” the SSL connection, talking securely to the outside world, and can then pass the request to the backend servers unencrypted over a fast, trusted internal network. This frees up your backend servers to focus on building the web pages.

- Compression: NGINX can compress website assets on the fly before sending them to the user (gzip is built in; Brotli requires an add-on module), speeding up transfer times without adding any load to the backend application servers.

- Load Balancing: This is the next big use case, and it’s a direct feature of being a reverse proxy.
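SSL/TLS termination and compression can both be handled in the public-facing server block. A sketch, with placeholder certificate paths and a hypothetical backend at 10.0.0.20:

```nginx
server {
    listen 443 ssl;
    server_name example.com;

    # TLS is terminated here (certificate paths are placeholders)
    ssl_certificate     /etc/ssl/example.com/fullchain.pem;
    ssl_certificate_key /etc/ssl/example.com/privkey.pem;

    # Compress text responses on the fly (text/html is always included)
    gzip on;
    gzip_types text/css application/javascript application/json image/svg+xml;

    location / {
        # Plain HTTP to the backend over the private network
        proxy_pass http://10.0.0.20:8080;
        proxy_set_header Host $host;
        # Tell the backend the original request arrived over HTTPS
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```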

3. Load Balancer (The “Distributor”)

What happens when your website gets so popular that a single backend server is not enough to handle the traffic? You need to “scale out” by adding more servers. But how do your visitors know which server to talk to?

They don’t. They just talk to the NGINX reverse proxy, which now also acts as a load balancer.

A load balancer’s job is simple: it distributes incoming traffic across a “pool” of identical backend servers. This is one of the most fundamental techniques for building a high-availability, scalable web application.

Why Load Balancing is Critical for Scalability

- High Availability & Redundancy: Let’s say you have three backend servers. If one of them crashes, NGINX will notice that requests to it are failing, mark it as unavailable, and distribute the traffic to the two remaining healthy servers. (Open-source NGINX detects failures passively, from failed requests; active health checks are an NGINX Plus feature.) Because a failed request can be retried on another server, most users will never notice the outage.

- Horizontal Scaling: Your traffic is surging for a Black Friday sale, and your two servers are at 90% capacity. No problem. You simply “spin up” three more identical servers, add their internal IP addresses to the NGINX configuration, and reload NGINX. Now you have five servers sharing the load. This is called horizontal scaling, and it’s how major websites handle massive traffic.
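Both scenarios are handled by an upstream block. In this sketch, with hypothetical private addresses, a server that fails repeatedly is temporarily taken out of rotation, and adding capacity means adding `server` lines and reloading NGINX:

```nginx
upstream app_servers {
    # After 3 failed attempts within 30s, the server is skipped for 30s
    server 10.0.0.11:8080 max_fails=3 fail_timeout=30s;
    server 10.0.0.12:8080 max_fails=3 fail_timeout=30s;
    server 10.0.0.13:8080 max_fails=3 fail_timeout=30s;
}

server {
    listen 80;
    server_name example.com;

    location / {
        proxy_pass http://app_servers;
        # If a server errors or times out, retry the request on the next one
        # (error and timeout are also the default conditions)
        proxy_next_upstream error timeout;
    }
}
```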

Common Load Balancing Algorithms

NGINX is smart about how it distributes traffic. It doesn’t just pick at random. A few common methods (called “directives” in the config) include:

- Round Robin: This is the simple default. It sends requests to the servers in a list, one after another (Server 1, Server 2, Server 3, Server 1, Server 2…).

- Least Connections: NGINX tracks how many active connections each backend server has and sends the new request to the server with the fewest connections. This often spreads load more evenly than round robin when some requests take much longer than others.

- IP Hash: This method takes the visitor’s IP address and runs a “hash” function on it to ensure that a specific user is always sent to the same backend server. This is important if your application stores user session information (like a shopping cart) on the local server.
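Each method is selected with a single directive at the top of an upstream block (in the http context); round robin needs no directive because it is the default. The upstream names and addresses here are hypothetical:

```nginx
# Round robin (default): requests rotate through the list
upstream app_round_robin {
    server 10.0.0.11:8080;
    server 10.0.0.12:8080;
}

# Least connections: pick the server with the fewest active connections
upstream app_least_conn {
    least_conn;
    server 10.0.0.11:8080;
    server 10.0.0.12:8080;
}

# IP hash: the same client address keeps reaching the same server
upstream app_ip_hash {
    ip_hash;
    server 10.0.0.11:8080;
    server 10.0.0.12:8080;
}
```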

4. Content Caching (The “Memory”)

This is another huge performance feature, especially for dynamic sites like those built on WordPress or other content management systems.

Generating a WordPress page is “expensive.” It requires running PHP code, making multiple queries to a MySQL database, and assembling the final HTML page. This can take hundreds of milliseconds or even a full second. It’s a lot of work for the backend server.

A cache is a place to store the result of that work.

How NGINX Caching Works

You can configure NGINX (acting as a reverse proxy) to cache the final HTML response from the backend.

- First Visitor: A user requests yoursite.com/blog-post.

  - NGINX passes the request to your WordPress server (backend).

  - WordPress builds the page (runs PHP, queries the database) and sends the 100KB HTML file back to NGINX.

  - NGINX does two things: (a) it sends the 100KB HTML file to the visitor, and (b) it saves a copy of that HTML file to its local disk, marked with a “key” (like yoursite.com/blog-post) and a “time-to-live” (TTL), for example, 10 minutes.

- Next 1,000 Visitors (within 10 minutes):

  - All 1,000 users request yoursite.com/blog-post.

  - NGINX sees the request, checks its cache, finds the file, and serves the 100KB HTML file directly from its memory or disk.

  - The request never even touches your WordPress server. The PHP code never runs. The database is never queried.

The difference in speed is staggering. Serving from the NGINX cache can take 2-10 milliseconds, versus the 200-1000+ milliseconds it would take to generate the page from scratch. This is one of the most effective ways to speed up a dynamic website and allow it to handle viral traffic.

The same caching idea, applied globally, is a CDN: a distributed network of caches. Plugins like the Elementor Image Optimizer take advantage of this, not only compressing images but also ensuring they are served via a fast CDN.
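The flow above can be sketched with NGINX's proxy cache. The cache path, the zone name `pages`, and the backend at 127.0.0.1:8080 are assumptions for illustration. A real WordPress setup also needs rules to bypass the cache for logged-in users and carts; by default, NGINX already declines to cache responses that set cookies.

```nginx
http {
    # Cached files live on disk; keys and metadata sit in a 10 MB
    # shared-memory zone named "pages"
    proxy_cache_path /var/cache/nginx/pages levels=1:2 keys_zone=pages:10m
                     max_size=1g inactive=60m;

    server {
        listen 80;
        server_name yoursite.com;

        location / {
            proxy_pass http://127.0.0.1:8080;
            proxy_cache pages;
            # The cache key: one entry per scheme + host + URI
            proxy_cache_key $scheme$host$request_uri;
            # Successful responses stay fresh for 10 minutes (the TTL)
            proxy_cache_valid 200 10m;
            # Expose HIT / MISS / EXPIRED for easy testing
            add_header X-Cache-Status $upstream_cache_status;
        }
    }
}
```

If the backend sends its own Cache-Control or Expires headers, those take priority over proxy_cache_valid.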

Microcaching

A very powerful advanced technique is “microcaching.” This is where you tell NGINX to cache dynamic content for a very short time, like one to five seconds.

This sounds too short to be useful, but it’s incredibly effective for high-traffic sites. If a single page receives 1,000 requests per second, caching it for just one second means that roughly 999 of those requests are served from the cache and only about one per second reaches your backend (provided NGINX is also set to send just one request to the backend while the entry is refreshed). It’s a simple change that can absorb a massive “thundering herd” of traffic.
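A microcaching sketch for PHP-FPM, assuming PHP-FPM listens on the socket /var/run/php/php8.2-fpm.sock; the zone name `micro` and the paths are hypothetical. fastcgi_cache_lock sends only one request per key to PHP when an entry is missing, and fastcgi_cache_use_stale keeps serving the previous copy while an expired one is refreshed:

```nginx
http {
    fastcgi_cache_path /var/cache/nginx/micro levels=1:2 keys_zone=micro:10m
                       max_size=100m inactive=10m;

    server {
        listen 80;
        server_name example.com;
        root /var/www/example.com;

        location ~ \.php$ {
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_pass unix:/var/run/php/php8.2-fpm.sock;

            fastcgi_cache micro;
            fastcgi_cache_key $scheme$request_method$host$request_uri;
            # The microcache: successful responses are fresh for 1 second
            fastcgi_cache_valid 200 1s;
            # On a miss, only one request per key is sent to PHP
            fastcgi_cache_lock on;
            # While an expired entry is refreshed, serve the previous copy
            fastcgi_cache_use_stale updating;
        }
    }
}
```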

5. Media Streaming

NGINX is also very good at serving video and audio files. Pre-segmented HLS (HTTP Live Streaming) and DASH streams are just playlists and small media files, which NGINX serves like any other static content, and additional modules (some in the commercial NGINX Plus, some third-party) can package streams on the fly. It also handles “pseudo-streaming” very well. This is where a user can “seek” to a part of a large MP4 video file that hasn’t been downloaded yet. NGINX can handle these “byte-range” requests efficiently, making it a great choice for self-hosting video content.
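For MP4 pseudo-streaming, a location like the following is a common pattern. The path is hypothetical, and the mp4 directive only works if NGINX was built with ngx_http_mp4_module (the --with-http_mp4_module configure option; `nginx -V` lists the options your build used):

```nginx
location /videos/ {
    root /var/www/media;

    # Byte-range requests for static files work without extra configuration.
    # The mp4 directive adds server-side seeking via ?start=<seconds>.
    location ~ \.mp4$ {
        mp4;
        mp4_buffer_size     1m;
        mp4_max_buffer_size 5m;
    }
}
```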

How NGINX Configuration Works: A Look Under the Hood

Unlike the user-friendly interfaces of Elementor, NGINX is configured entirely through plain text files. While this is powerful, it’s also what gives it a steep learning curve. The main configuration file is typically located at /etc/nginx/nginx.conf.

This section gets technical, but understanding the logic is valuable for any web creator.

The nginx.conf File: The Heart of the Operation

The configuration file is structured using a system of directives and blocks (also called “contexts”).

- Directives: These are the actual commands. Each one is a name followed by one or more parameters, ending with a semicolon. For example:

  - worker_processes 4; (Tells NGINX to start 4 worker processes)

  - root /var/www/html; (Sets the website’s root folder)

- Blocks (Contexts): These are containers for directives, defined by curly braces {…}. They group settings that apply to a specific “context,” like all HTTP traffic, or a specific website.

The structure is hierarchical:

- Main (Global) Block: The top-level file itself. Directives here affect the entire server (like worker_processes or the user NGINX runs as).

- http Block: This block contains all directives for web traffic. You’ll set global web settings here, like cache paths and log formats.

- server Block: This is the most important block. Each server block defines one “virtual host” (i.e., one website). You can have many server blocks inside your http block. This is where you define which domain names to listen for (server_name) and what port to listen on (listen).

- location Block: This is the most powerful part. Inside a server block, you use location blocks to tell NGINX how to handle requests for different URLs or file types.
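Put together, the hierarchy looks like the skeleton below. The user name, log path, and site details are illustrative assumptions; Linux distributions ship their own defaults:

```nginx
# Main (global) context: affects the whole NGINX process
user www-data;
worker_processes auto;

# Connection-processing settings (this block is required, even if empty)
events {
}

http {
    # http context: settings shared by every website
    include    /etc/nginx/mime.types;
    access_log /var/log/nginx/access.log;

    server {
        # server context: one virtual host
        listen 80;
        server_name example.com;
        root /var/www/example.com;

        location /images/ {
            # location context: rules for URLs starting with /images/
            expires 7d;
        }
    }
}
```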

Practical Example 1: A Basic Static Site server Block

Here is a fully commented example of a simple server block that serves a basic HTML website. On Debian and Ubuntu systems, this would typically go in a file such as /etc/nginx/sites-available/example.com.conf, enabled by symlinking it into /etc/nginx/sites-enabled/.

```nginx
# Define the "server" or virtual host
server {
    # Listen on port 80 (standard HTTP traffic)
    listen 80;

    # Listen on port 443 for secure (HTTPS) traffic
    listen 443 ssl;
    # HTTPS needs a certificate and key (these paths are placeholders)
    ssl_certificate     /etc/ssl/example.com/fullchain.pem;
    ssl_certificate_key /etc/ssl/example.com/privkey.pem;

    # Define the domain name(s) this server block responds to
    server_name example.com www.example.com;

    # Set the "document root":
    # the folder on the server where the website's files are
    root /var/www/example.com/html;

    # Define the default file(s) to look for.
    # If a user requests a directory, NGINX looks for these files in order.
    index index.html index.htm;

    # --- Location blocks ---

    # The main "catch-all" location block for / (every request)
    location / {
        # This directive tells NGINX how to find the file:
        # 1. $uri: try the file exactly as requested (e.g., /about.html)
        # 2. $uri/: if the request is a directory, look for an index file (e.g., /about/index.html)
        # 3. =404: if nothing is found, return a 404 Not Found error
        try_files $uri $uri/ =404;
    }

    # A prefix match: applies to every URL that starts with /admin
    location /admin {
        # You could add security here, like:
        # allow 192.168.1.100;  # Allow your IP
        # deny all;             # Block everyone else
    }

    # A location block using a regular expression (regex).
    # ~* makes the match case-insensitive; this matches any request ending in .css or .js
    location ~* \.(css|js)$ {
        # Set a browser cache header for 1 year.
        # This tells the user's browser to cache these files.
        expires 1y;
        # Don't log "file not found" errors for these assets
        log_not_found off;
    }
}
```

Practical Example 2: NGINX as a Reverse Proxy for WordPress

This is a more advanced and realistic example. Here, NGINX will serve static files directly but will pass all PHP requests to a separate “PHP-FPM” service, which is the standard way to run PHP with NGINX.


Here is the configuration:

```nginx
server {
    listen 80;
    server_name wordpress-example.com;

    # Set the root directory for WordPress
    root /var/www/wordpress-example.com;

    # The default index file is now index.php
    index index.php;

    # This is the main location block for WordPress
    location / {
        # This directive makes WordPress "pretty permalinks" work.
        # It tries to find the file/directory. If not found,
        # it passes the request to /index.php with the arguments.
        try_files $uri $uri/ /index.php?$args;
    }

    # This location block handles all static files.
    # It tells NGINX to serve them directly and cache them aggressively.
    location ~* \.(css|js|jpg|jpeg|png|gif|ico|svg|webp)$ {
        expires 1y;
        log_not_found off;
        add_header Cache-Control "public";
    }

    # This is the most important part for dynamic content.
    # This location block matches ANY request that ends in .php
    location ~ \.php$ {
        # --- This is the "proxy" part (via the FastCGI protocol) ---

        # Include the standard FastCGI parameters
        # (snippets/fastcgi-php.conf ships with Debian/Ubuntu packages)
        include snippets/fastcgi-php.conf;

        # This is the "pass" directive. It hands the request
        # to the PHP-FPM service, which is listening on a "socket" file.
        fastcgi_pass unix:/var/run/php/php8.2-fpm.sock;

        # This could also be a network address like:
        # fastcgi_pass 127.0.0.1:9000;
    }

    # A security rule: deny access to .htaccess files.
    # Even though NGINX doesn't use them, this prevents clients from reading them.
    location ~ /\.ht {
        deny all;
    }
}
```

This configuration shows the power and flexibility of NGINX. It’s configured to do two jobs at once: act as a fast static web server and act as a reverse proxy for all dynamic PHP requests.

Why Should a Web Creator Care About NGINX?

This all seems very technical. Why should a designer or a freelance creator care about any of this?

Because the server is the foundation of the house you are building. A beautiful website on a slow, unstable foundation will provide a terrible user experience. Understanding NGINX helps you understand the why behind website performance.

The Performance Impact: Speed, Speed, Speed

As we’ve covered, NGINX is fundamentally faster at the core job of serving files and handling connections.

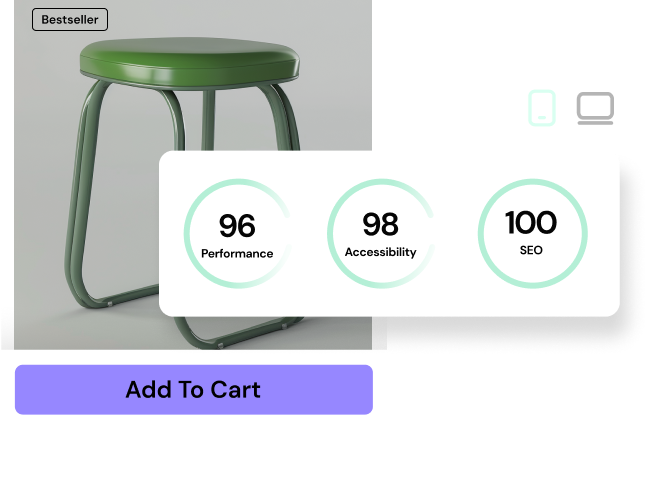

- Better Core Web Vitals: A fast NGINX server with caching can dramatically improve your Time to First Byte (TTFB) and First Contentful Paint (FCP), which in turn helps Largest Contentful Paint (LCP), one of Google’s Core Web Vitals. Your site feels faster.

- Lower Bounce Rates: A faster site means users are less likely to click the “back” button.

- Better SEO: Google has explicitly used site speed and Core Web Vitals as ranking signals. A fast server foundation is an important building block for good SEO.

The Scalability Impact: Handling Viral Traffic

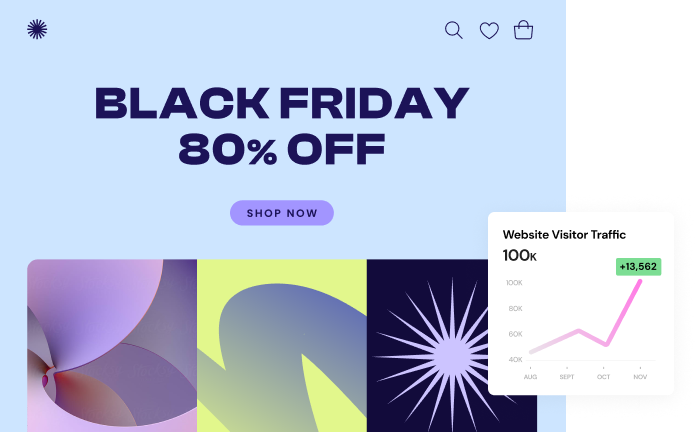

Imagine you build a site for a client. You use the Elementor Pro builder to create a stunning WooCommerce store. The client launches a new product, and it goes viral.

Can their site handle 10,000 visitors in the next hour?

- Without NGINX Caching/Load Balancing: The site will likely crash. The database will be overloaded with queries, and the PHP processor will run out of workers. This is a disaster for the client.

- With an NGINX Reverse Proxy: NGINX stands at the front gate. Its cache serves the static product page to 9,900 of those visitors. Only a few requests “get through” to the backend to process “add to cart” actions. The site stays online, and the client makes thousands of sales.

This is the power of a modern server stack. It allows your creations to scale.

The Security Impact: A Hardened Front Line

Using NGINX as a reverse proxy is a massive security upgrade.

- Blocks Malicious Traffic: It can be configured with a Web Application Firewall (WAF) to filter out common attacks (like SQL injection or cross-site scripting) before they ever reach your WordPress application.

- Rate Limiting: You can configure NGINX to “rate limit” requests to specific pages. For example, you can say “a single IP address can only try to POST to wp-login.php 10 times per minute.” This shuts down most simple brute-force login attacks, which depend on making many attempts quickly.
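The login example can be expressed with NGINX's limit_req module. This sketch uses a map so that only POST requests are counted (requests with an empty key are not limited); the zone name `wp_login`, the site paths, and the PHP-FPM socket path are assumptions:

```nginx
http {
    # Rate-limit key: the client IP for POST requests, empty otherwise
    map $request_method $login_limit_key {
        POST    $binary_remote_addr;
        default "";
    }

    # 10 requests per minute per IP, tracked in a 10 MB shared zone
    limit_req_zone $login_limit_key zone=wp_login:10m rate=10r/m;

    server {
        listen 80;
        server_name example.com;
        root /var/www/example.com;

        location = /wp-login.php {
            # Allow a burst of 5 extra attempts, then reject with 429
            limit_req zone=wp_login burst=5 nodelay;
            limit_req_status 429;

            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_pass unix:/var/run/php/php8.2-fpm.sock;
        }
    }
}
```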

The “Managed” Approach: NGINX Without the Headache

Reading the configuration examples above, you might be thinking, “This is way too complicated. I’m a creator, not a system administrator.”

You are correct.

The Challenge of DIY Server Management

Configuring, tuning, and securing a high-performance NGINX server for WordPress is a highly specialized skill. It is not something most web creators want to do. It requires deep knowledge of Linux, networking, security, and PHP. It’s a full-time job.

“As web creators, our value is in the ‘what’—the design, the user experience, the strategy,” says web creation expert Itamar Haim. “We shouldn’t have to be experts in the ‘how’ of server infrastructure. A managed platform that handles the complex ‘how’—like a fine-tuned NGINX configuration, security, and caching—lets us focus on what we do best: creating value for our clients.”

This is why the market has shifted so dramatically toward Managed Hosting Platforms.

The Rise of Managed Hosting Platforms

A managed hosting provider essentially sells you the benefits of a complex server stack without the headache. When you sign up for a high-quality managed host, you are not just getting a blank server. You are getting a pre-configured, finely-tuned web creation platform.

Here is what “managed” means in the context of NGINX:

- Optimized Stack: The provider has already configured NGINX (or a similar high-performance server like LiteSpeed) to work well with WordPress. They have tuned the FastCGI (PHP-FPM) settings, set up the static file handling, and optimized the worker processes.

- Server-Side Caching: The host implements a robust caching layer (often using NGINX’s fastcgi_cache or a tool like Varnish) for you. You do not need to write a location block. You just see a “Purge Cache” button in your WordPress dashboard.

- Security Included: They manage the Web Application Firewall (WAF) and DDoS protection at the NGINX/proxy level. You do not need to configure deny rules.

- Unified Support: If your site is slow, there is no “blame game” where the plugin developer blames the host. The hosting provider is responsible for the entire stack, from the server to the CMS.


The Elementor Hosting Example: A Complete Platform

This “all-in-one” approach is the entire philosophy behind Elementor Hosting. It’s an example of a complete platform that bundles all these complex pieces together.

This platform is built on the Google Cloud Platform, providing a highly scalable and reliable infrastructure. It integrates a CDN (Cloudflare Enterprise), which is a global network of NGINX-like proxy servers that cache your content in data centers all over the world. It also bundles all the security (WAF, DDoS protection) and caching layers.

The point is this: for a creator using the Elementor platform, this managed solution means they get all the benefits of a high-performance NGINX-powered stack (speed, scalability, security) without ever having to edit a configuration file.

This frees up the creator to focus on what matters: planning the site (perhaps using the AI Site Planner), generating content (using tools like Elementor AI), and building the actual user experience.

Whether you are building a simple blog with a free theme, a complex eCommerce (WooCommerce) site, or managing dozens of client sites, the “managed” approach handles the NGINX layer for you, letting you build on a foundation you know is fast and secure.

The Future of NGINX

NGINX is not standing still. In 2019, NGINX Inc. was acquired by F5 Networks, a major enterprise networking and security company. This has accelerated its development, particularly for its commercial product, NGINX Plus.

NGINX Plus adds more advanced, enterprise-grade features on top of the open-source version, such as more sophisticated load balancing algorithms, live activity monitoring dashboards, and dedicated support.

The NGINX team has also been developing NGINX Unit, a newer project that is a multi-language application server. It aims to simplify modern applications even further by acting as a web server and application server all in one.

NGINX remains one of the most critical and dominant technologies in the modern web stack. Its role in powering microservices, APIs, and containerized applications (like Docker) means it will be a foundational piece of the internet for many years to come.

Conclusion: Your Role in a High-Performance Web

As a web creator, you may never open the nginx.conf file. But you now understand what it does.

You know that NGINX is a lightweight, event-driven powerhouse that excels at handling high traffic. You know it’s not just a web server but also a reverse proxy, a load balancer, and a caching engine.

You can now ask intelligent questions of your hosting provider:

- “What web server are you using? Is it NGINX or LiteSpeed?”

- “Are you using NGINX as a reverse proxy in front of Apache?”

- “What kind of server-side caching do you offer? Is it NGINX FastCGI caching or something else?”

Understanding these concepts makes you a more knowledgeable and capable professional. You can better diagnose performance problems and, most importantly, make better decisions about the technology you choose to build on.

Whether you decide to become a “do-it-yourself” server admin or (more likely) choose a premium managed platform like Elementor Hosting that abstracts all that complexity away, you now understand the high-performance foundation that is non-negotiable for a fast, secure, and scalable website. Your clients, and their users, will thank you for it.

Frequently Asked Questions (FAQ) About NGINX

1. What does NGINX stand for? NGINX is a stylized spelling of “Engine X.” It represents the idea of a powerful “engine” for the web.

2. Is NGINX better than Apache? Neither is universally “better.” NGINX is generally faster at serving static content and uses far less memory under high traffic due to its event-driven architecture. Apache is known for its extreme flexibility, powerful module system, and easy-to-use .htaccess files. For high-performance WordPress sites, a stack using NGINX (or a similar server like LiteSpeed) is generally preferred.

3. Is NGINX free? Yes. NGINX is and always has been open-source and free to use. There is also a commercial (paid) version called NGINX Plus that adds more features, dashboards, and enterprise-level support.

4. Does NGINX replace Apache? It can. Many modern web stacks use NGINX exclusively, passing dynamic requests to a PHP-FPM processor. However, NGINX can also work with Apache, acting as a reverse proxy in front of it. In this setup, NGINX handles static files and “terminates” SSL, while Apache handles the dynamic application logic.

5. Does WordPress work with NGINX? Yes, WordPress works exceptionally well with NGINX. It is the web server of choice for most high-performance, high-traffic WordPress sites. It requires a specific configuration (as shown in the example above) to handle WordPress’s “pretty permalinks” and to pass PHP files to a PHP-FPM processor.

6. What is a reverse proxy in simple terms? A reverse proxy is like a receptionist at a front desk. All website visitors talk to the receptionist (NGINX), who then passes their requests to the right employee (backend server) and returns the answer. This improves security (by hiding the employees) and performance (the receptionist can handle common questions from memory, which is caching).

7. What is load balancing? Load balancing is the act of distributing traffic across multiple servers. If you have one NGINX server and three identical web servers, the NGINX load balancer will “balance” the visitors among all three, preventing any one server from being overloaded. This is essential for scalability and preventing downtime.

8. What is NGINX used for in simple terms? In simple terms, NGINX is used to make websites fast, secure, and reliable. It does this by acting as a high-speed web server, a protective reverse proxy, a traffic-distributing load balancer, and an intelligent content cache.

9. Do I need to know NGINX if I use a managed WordPress host? You do not need to know how to configure NGINX. Your managed host (like Elementor Hosting) does all that for you. However, understanding what NGINX is and what it does (like caching and reverse proxying) helps you understand why your site is fast and how to best work with your host’s platform.

10. What is NGINX Plus? NGINX Plus is the paid, commercial product from F5 (the company that owns NGINX). It includes the free NGINX core plus advanced features for enterprises, such as more powerful load balancing, a live activity monitoring dashboard, and 24/7 expert support.
