Using this directive correctly is a routine part of good technical SEO. Using it incorrectly can make your entire website invisible to search engines, tanking your traffic and revenue overnight. This guide will walk you through every aspect of the Disallow directive, from its basic syntax to the critical strategic mistakes that even experienced developers sometimes make. We will cover how to use it, when to use it, and when you absolutely must use something else.

Key Takeaways

- Disallow Blocks Crawling, Not Indexing: The Disallow directive tells a search engine crawler “do not access this file or folder.” It does not reliably remove a page from Google’s search results if it’s already indexed.

- The noindex Tag is for Hiding Pages: To properly remove a page from search results, use the <meta name="robots" content="noindex"> tag. The page must stay crawlable (not disallowed) so Google can see the noindex tag.

- Never Block CSS or JavaScript: Blocking resource files like CSS and JS prevents Google from rendering your page correctly. Google may then misjudge the page’s layout and content, which can harm your rankings.

- Disallow is for Crawl Budget: The best use for Disallow is to block access to unimportant areas (like admin panels, internal search results, or cart pages) to save your “crawl budget” for your important content.

- Location is Key: The robots.txt file must be a plain text file located in the root directory of your domain (e.g., example.com/robots.txt).

- Test Everything: Always use Google Search Console’s URL Inspection tool and robots.txt report to verify your rules after you make them live. (The older Robots.txt Tester was retired in late 2023.)

What is a Robots.txt File? The Foundation of Crawler Management

Before we can master the Disallow command, we need to understand the file where it lives.

The “Doorman” Analogy Explained

Think of your website as a large office building. You have public lobbies, private offices, meeting rooms, and utility closets. Search engine crawlers (or “bots”) are like visitors who want to create a public map of your building.

The robots.txt file is the instruction sheet you hand this visitor at the front desk. It’s the doorman pointing things out:

- “You can visit all the public lobbies.”

- “Please do not go into the ‘Admin’ area.”

- “The ‘Utility Closets’ are off-limits.”

The bot, if it’s a “good” bot like Googlebot or Bingbot, will read this sheet and politely follow your rules.

Where is the Robots.txt File Located?

A robots.txt file has a very specific and non-negotiable location: the root directory of your website.

You can find it by typing your domain and adding /robots.txt to the end.

- https://elementor.com/robots.txt

- https://google.com/robots.txt

- https://your-domain.com/robots.txt

If the file is placed anywhere else (like your-domain.com/blog/robots.txt), search engines will not find it and will assume you do not have one. It must also be a plain text file, not a Word doc or HTML page.

What is the Robots Exclusion Protocol (REP)?

Robots.txt is not a law. It’s a voluntary standard known as the Robots Exclusion Protocol (REP). Martijn Koster proposed it in 1994 to keep web crawlers from overwhelming servers, and it was formalized as RFC 9309 in 2022.

Because it’s a protocol and not a firewall, it relies on the cooperation of the bot.

- Good Bots (like Googlebot, Bingbot) will almost always follow the rules you set. It’s in their best interest to respect your wishes and not waste resources crawling pages you’ve marked as off-limits.

- Malicious Bots (like spambots, email harvesters) will completely ignore your robots.txt file. In fact, some look at your robots.txt file specifically to find the locations you don’t want them to see, like /admin or /private.

This is a critical distinction: robots.txt provides crawl guidance, not security.

How Search Engines Find and Read Your Robots.txt

The process is simple and automated. When a search engine crawler first visits your site, the very first file it looks for is robots.txt.

- Request: Googlebot arrives at your-domain.com.

- First Stop: Before it requests any other page, it requests your-domain.com/robots.txt.

- Read and Cache: If the file is found (a 200 OK status), Googlebot reads the rules inside. It then caches these rules, usually for up to 24 hours. This means if you make a change, Googlebot might not see that change until its next scheduled cache refresh.

- Crawl: Googlebot proceeds to crawl your site, following the rules it just learned.

- No File?: If the file is not found (a 404 error), Googlebot assumes you have no rules and proceeds to crawl every page it can find. This is generally fine but not ideal.
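The fetch-and-decide step above can be sketched as a small function. This is a simplified model, not Googlebot’s actual logic; the function name crawl_policy and the labels it returns are made up for illustration. The 5xx branch is not covered in this guide: it is an assumption taken from RFC 9309, which tells crawlers to assume a complete disallow when the file is unreachable.

```python
def crawl_policy(status_code: int) -> str:
    """Map the HTTP status of /robots.txt to a simplified crawl decision."""
    if 200 <= status_code < 300:
        # File found: read the rules and follow them.
        return "follow-rules"
    if 400 <= status_code < 500:
        # File missing (such as a 404): no rules, so everything may be crawled.
        return "allow-all"
    if 500 <= status_code < 600:
        # Server error: per RFC 9309, assume everything is disallowed for now.
        return "disallow-all"
    # Redirects and anything else are out of scope for this sketch.
    return "unknown"


for code in (200, 404, 503):
    print(code, crawl_policy(code))
```

The practical takeaway is that a robots.txt URL returning server errors can be worse than a missing file, because a well-behaved crawler may stop crawling until the error clears.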

What is a “User-Agent”?

Inside the robots.txt file, rules are grouped by “user-agent.” A user-agent is simply the name of the bot.

The file is a list of User-agent groups, each with its own set of Disallow (or Allow) rules.

- User-agent: * is the most common user-agent line. The asterisk (*) is a wildcard that means “this rule applies to all bots.”

- User-agent: Googlebot applies only to Google’s main crawler.

- User-agent: Bingbot applies only to Microsoft’s Bing crawler.

You can have separate rules for different bots. For example, you might want Google to crawl everything but block an aggressive SEO analytics bot that is using too many server resources.

# Block AhrefsBot from the whole site

User-agent: AhrefsBot

Disallow: /

# Let all other bots crawl

User-agent: *

Disallow:
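To see how a parser reads these groups, here is a minimal sketch using Python’s standard urllib.robotparser module. The domain and URL are placeholders, and Python’s parser is only a rough stand-in for how real search engine crawlers behave.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
# Block AhrefsBot from the whole site
User-agent: AhrefsBot
Disallow: /

# Let all other bots crawl
User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://your-domain.com/blog/post"  # placeholder URL
print(parser.can_fetch("AhrefsBot", url))  # False: its own group blocks everything
print(parser.can_fetch("Googlebot", url))  # True: falls back to the * group
```

A bot uses the group that names it and only falls back to the * group when no specific group matches.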

The Core Directive: Understanding Disallow

Now we get to the main command. The Disallow directive tells a user-agent what it should not crawl. The syntax is simple: Disallow: [path].

The [path] is the part of the URL that comes after your domain.

The Basic Syntax

Let’s look at the three most basic Disallow rules.

1. Block the Entire Site (DANGER!)

User-agent: *

Disallow: /

The single / character represents the root of your site. This rule tells all bots, “Do not crawl anything on this entire website.” Older WordPress versions generated this rule when you checked the “Discourage search engines from indexing this site” box (since version 5.3, that box adds a noindex meta tag instead). The rule is useful for a brand-new site in development, but it’s catastrophic if you leave it on after launch.

2. Allow the Entire Site

User-agent: *

Disallow:

By leaving the Disallow directive blank, you are telling all bots, “You are allowed to crawl everything.” This is technically the same as having no robots.txt file at all, but it is more explicit. It’s a good default file to have.

3. Block a Specific Folder

User-agent: *

Disallow: /admin/

Disallow: /private-files/

This tells all bots not to crawl any URL that begins with /admin/ or /private-files/. The trailing slash is important.

How Disallow Handles Paths (Case Sensitivity and Specificity)

This is where people make mistakes.

- It’s Case-Sensitive: Disallow: /Page/ is different from Disallow: /page/. If you want to block both, you need two separate rules.

- It’s “Starts-With” Matching: A Disallow value matches any URL path that starts with that value. For example, the rule Disallow: /p will block all of the following:

  - /page.html

  - /profile/

  - /private/folder/document.pdf

  - /p

Because of this, it’s safer to use a trailing slash for folders (Disallow: /p/) to avoid accidentally blocking files like page.html.
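The two matching rules above fit in a few lines of Python. This is a sketch for plain rules only (no wildcards); the helper name is_disallowed is hypothetical.

```python
def is_disallowed(path: str, rule: str) -> bool:
    """Return True if a plain (no wildcard) Disallow value matches the path."""
    # An empty Disallow value blocks nothing; otherwise matching is a
    # case-sensitive "starts with" comparison.
    return bool(rule) and path.startswith(rule)


print(is_disallowed("/page.html", "/p"))     # True: "/page.html" starts with "/p"
print(is_disallowed("/page.html", "/p/"))    # False: the trailing slash narrows the rule
print(is_disallowed("/p/file.html", "/p/"))  # True: inside the /p/ folder
print(is_disallowed("/page/", "/Page/"))     # False: matching is case-sensitive
```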

How to Use Disallow to Block a Single File

If you want to block just one page, you list its full path.

User-agent: *

Disallow: /my-secret-page.html

Disallow: /internal-company-report-q3.pdf

This is a very specific and safe rule. It will not block any other page.

Advanced Syntax: Pattern Matching with Disallow and Allow

The Robots Exclusion Protocol has evolved to include two special characters for more complex rules: the wildcard (*) and the end-of-string anchor ($). Note: Not all crawlers support these, but Google and Bing do.

Using the Wildcard (*)

The * acts as a placeholder for “any sequence of characters.”

Blocking all files of a certain type:

User-agent: *

Disallow: /*.pdf

This rule blocks any URL that has .pdf in it.

- BLOCKED: /my-file.pdf

- BLOCKED: /folder/another-file.pdf

- BLOCKED: /this-is-a-file.pdf-but-not-really.html (Be careful!)

Blocking all query parameters: Query parameters are the parts of a URL after a ? symbol, often used for tracking or filtering.

User-agent: *

Disallow: /*?

This rule blocks any URL that contains a question mark. This is a very common way to block faceted navigation and tracking URLs that can create duplicate content.

- BLOCKED: /shop?color=blue

- BLOCKED: /blog?source=email

Using the End-of-String Anchor ($)

The $ character signifies the end of the URL. This makes your rule much more specific.

Let’s revisit our PDF example. Disallow: /*.pdf was too broad. Disallow: /*.pdf$ is much better.

This rule says “Block any URL that ends with .pdf.”

- BLOCKED: /my-file.pdf

- BLOCKED: /folder/another-file.pdf

- ALLOWED: /this-is-a-file.pdf-but-not-really.html
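One way to reason about * and $ is to translate a rule into a regular expression. The sketch below does that; rule_to_regex and matches are hypothetical helpers written for this illustration, not part of any crawler or library.

```python
import re


def rule_to_regex(rule: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape everything, then turn each escaped "*" back into "match anything".
    body = re.escape(rule).replace(r"\*", ".*")
    # Rules always match from the start of the path; "$" also pins the end.
    return re.compile("^" + body + ("$" if anchored else ""))


def matches(rule: str, path: str) -> bool:
    return rule_to_regex(rule).match(path) is not None


tricky = "/this-is-a-file.pdf-but-not-really.html"
print(matches("/*.pdf", tricky))                       # True: ".pdf" appears somewhere
print(matches("/*.pdf$", tricky))                      # False: the URL does not end in ".pdf"
print(matches("/*.pdf$", "/folder/another-file.pdf"))  # True
print(matches("/*?", "/shop?color=blue"))              # True: contains a question mark
```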

The Allow Directive: Creating Exceptions

The Allow directive does the opposite of Disallow: it explicitly permits crawling. Its power comes from its ability to override a Disallow rule.

This is perfect for blocking an entire folder except for one specific file.

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

This is the default rule in WordPress and is a perfect example.

- Disallow: /wp-admin/ blocks the entire admin folder.

- Allow: /wp-admin/admin-ajax.php punches a hole in that rule, allowing bots to access the admin-ajax.php file, which is necessary for some front-end site functions to work properly.

Google and Bing use a “longest match” rule. They will apply the most specific rule (the one with the most characters) that matches a given URL.
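The “longest match” idea can be sketched as follows for plain prefix rules. The function name is_allowed and the rule format are assumptions made for this example. The tie-break (Allow wins when both rules are the same length) is not stated in this guide; it follows Google’s published documentation and RFC 9309.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Apply the longest matching rule; rules are ("allow" | "disallow", prefix) pairs."""
    best_len = -1
    allowed = True  # no matching rule means crawling is allowed
    for kind, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = kind == "allow"
        # A longer prefix wins; on a tie, prefer the less restrictive Allow.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


RULES = [("disallow", "/wp-admin/"), ("allow", "/wp-admin/admin-ajax.php")]
print(is_allowed("/wp-admin/admin-ajax.php", RULES))  # True: the Allow rule is longer
print(is_allowed("/wp-admin/options.php", RULES))     # False: only the Disallow matches
print(is_allowed("/blog/", RULES))                    # True: no rule matches
```

Because the longest rule wins, the order of Allow and Disallow lines inside a group does not matter under this model.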

The Billion-Dollar Mistake: Disallow vs. noindex

This is the most important concept in this entire guide. Using the wrong tool for the job can be catastrophic for your SEO.

- Disallow = “Do not crawl this page.” (Blocks the bot)

- noindex = “Do not index this page.” (Blocks the page from search results)

What Disallow Actually Does (Blocks Crawling)

When you Disallow a URL, you tell Googlebot, “Do not open this door.”

But what if Google already knows about the page from other sources, like a backlink from another website?

In this case, Google knows the page exists but is not allowed to look at it. The page may stay in the index. You will see this dreaded message in Google search results:

“A description for this result is not available because of this site’s robots.txt.”

This is the worst of both worlds. The page is still in Google, but it looks broken and unprofessional, and no one will click it.

What noindex Actually Does (Blocks Indexing)

The noindex directive is a meta tag you place in the <head> section of your HTML page:

<meta name="robots" content="noindex, follow">

This tag tells Googlebot, “You are welcome to open this door and read this page. However, you are not allowed to show this page on your public map (the search results).”

This is the correct and most reliable way to remove a page from Google’s index. For non-HTML files such as PDFs, the equivalent is an X-Robots-Tag: noindex HTTP response header.

The noindex and Disallow Conflict (The “Catch-22”)

Here is the trap many people fall into. They have a page they want to remove from Google, so they do both:

- They add <meta name="robots" content="noindex"> to the page.

- They add Disallow: /my-page/ to robots.txt.

What happens? Googlebot arrives. It reads robots.txt first. It sees Disallow: /my-page/ and obeys. It never crawls the page. Because it never crawls the page, it never sees the noindex tag. The page can remain indexed indefinitely.

As web expert Itamar Haim often states, “Using Disallow to hide a page from search results is one of the most common and costly SEO mistakes a new site owner can make. You are telling the bot not to look, but you are not telling it to forget what it already knows.”
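This conflict is easy to detect automatically. The sketch below uses only the Python standard library to flag a page that carries noindex while robots.txt blocks Googlebot from reading it. The class and function names are hypothetical, the URL is a placeholder, and Python’s parser only approximates Google’s own matching.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives of any <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(p.strip().lower() for p in content.split(","))


def noindex_is_hidden(robots_txt: str, url: str, html: str) -> bool:
    """True when the page carries noindex but robots.txt stops Googlebot from reading it."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    blocked = not rules.can_fetch("Googlebot", url)
    return "noindex" in finder.directives and blocked


PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
URL = "https://your-domain.com/my-page/"  # placeholder URL
print(noindex_is_hidden("User-agent: *\nDisallow: /my-page/\n", URL, PAGE))  # True
print(noindex_is_hidden("User-agent: *\nDisallow:\n", URL, PAGE))            # False
```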

Step-by-Step: How to Correctly Remove a Page from Google

- Go to your robots.txt file. Remove any Disallow rules for the page you want to remove. You must allow Google to crawl it.

- Go to the page itself (e.g., in your WordPress editor) and add the noindex meta tag to its <head>.

- Ask Google to recrawl the page by using “Request Indexing” in the Google Search Console “URL Inspection” tool.

- Wait. Google will re-crawl the page, see the noindex tag, and drop it from the index.

- Only after the page is gone from search results can you (optionally) add the Disallow rule back. This will prevent Google from wasting time re-crawling it in the future, which saves crawl budget.

What You Should Never Block with Disallow

A common mistake on new sites is being too aggressive with Disallow rules. This can be just as bad as blocking the whole site.

Critical CSS and JavaScript (JS) Files

In the old days of the web, Google only read HTML. Today, Google’s crawlers render your page much like a web browser does, applying your CSS and running your JavaScript.

Google needs to see your page exactly as a user sees it. To do this, it must be able to download and read all your CSS and JavaScript files.

If you block your /themes/ or /js/ folders, Google cannot render the page properly. It sees a broken, unstyled version of plain HTML, may conclude your page offers a poor user experience, and your rankings can suffer badly.

Rule of thumb: If a file is needed to make your page look correct or function properly, you must NOT Disallow it.
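A quick audit can catch this before it hurts you. The sketch below checks a list of asset URLs against a robots.txt file and reports any CSS or JS file Googlebot would be blocked from fetching. The function name blocked_assets, the sample rules, and the URLs are all illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser


def blocked_assets(robots_txt: str, asset_urls: list[str]) -> list[str]:
    """Return the CSS/JS URLs that Googlebot would not be allowed to fetch."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return [
        url
        for url in asset_urls
        # Ignore any query string when checking the file extension.
        if url.split("?")[0].endswith((".css", ".js"))
        and not rules.can_fetch("Googlebot", url)
    ]


ROBOTS_TXT = """\
User-agent: *
Disallow: /themes/
Disallow: /wp-admin/
"""
ASSETS = [
    "https://your-domain.com/themes/main.css",
    "https://your-domain.com/js/app.js",
    "https://your-domain.com/wp-admin/index.php",
]
print(blocked_assets(ROBOTS_TXT, ASSETS))
```

Here only the stylesheet under /themes/ is reported: the script under /js/ is crawlable, and the blocked admin URL is not a CSS or JS file.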

Real-World Use Cases: When Should You Use Disallow?

So if Disallow isn’t for hiding pages, what is it for?

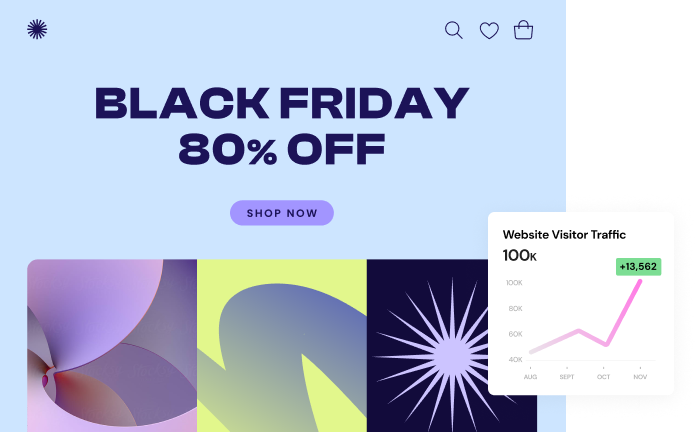

It’s for crawl budget optimization and blocking non-public sections. You are telling Google, “Don’t waste your limited time and resources crawling these unimportant pages. Please focus on my real content.”

1. Staging and Development Sites

When you are building a new site or testing a redesign, you often do it on a “staging” sub-domain (like staging.your-domain.com). You do not want Google to find and index this half-finished site.

- Best Method: Password protect the entire staging site (using HTTP authentication). This stops bots and humans.

- Good Method: Use a robots.txt file on the staging site’s root with User-agent: * and Disallow: /.

- Hosting Integration: Many modern managed WordPress hosting platforms provide “staging site” features. When you create a staging site, these hosts often automatically add the Disallow: / rule or password protection for you, so you can build and test with peace of mind.

Here is a great overview of how a high-quality hosting environment works: https://www.youtube.com/watch?v=gvuy5vSKJMg

2. WordPress Admin and Core Files

As mentioned, WordPress has a great default robots.txt. You absolutely want to block your /wp-admin/ folder. There is no reason for Google to crawl your site’s backend login page or admin panels.

- Disallow: /wp-admin/

- Disallow: /wp-login.php

3. Internal Search Results Pages

When a user searches on your site, WordPress might create a URL like your-domain.com/?s=my-search.

These search result pages are “thin content,” often create duplicate content issues, and provide no unique value to search engines. They are a classic waste of crawl budget.

- Disallow: /*?s=

- Disallow: /search/ (if your site uses a path like /search/my-search)

4. eCommerce Pages (Cart, Checkout, My Account)

These pages are unique to each user and have zero SEO value. You do not want your “Cart” page showing up in Google.

- Disallow: /cart/

- Disallow: /checkout/

- Disallow: /my-account/

This remains true even when using advanced tools to style them. For example, if you use the Elementor WooCommerce Builder to create a beautiful, custom checkout page, you still must block that URL. The page is for transacting, not for ranking.

5. “Thank You” Pages and Lead Gen Funnels

After a user signs up for your newsletter, you might send them to your-domain.com/thank-you. You don’t want people finding this page in Google and skipping your sign-up form.

- Good Method: Disallow: /thank-you/

- Best Method: Add a noindex tag to the /thank-you/ page. This is more reliable, just in case someone links to your thank you page.

6. PDFs and Other Internal Files

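If you have internal company reports, price lists, or documents that aren’t secret but are not intended for the public, Disallow stops crawlers from fetching them. As with pages, a disallowed file can still be indexed if other sites link to it, so use an X-Robots-Tag: noindex response header when a file must stay out of search results.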

- Disallow: /internal-reports/*.pdf$
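The use cases above can be collected into a single file. The sketch below assembles one with a small hypothetical helper, build_robots_txt; the paths are the examples from this section and should be adjusted to the URLs your site actually uses.

```python
from typing import Sequence


def build_robots_txt(disallow: Sequence[str], allow: Sequence[str] = ()) -> str:
    """Build one User-agent: * group from lists of Disallow and Allow paths."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += [f"Allow: {path}" for path in allow]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(
    disallow=[
        "/wp-admin/",
        "/wp-login.php",
        "/*?s=",
        "/search/",
        "/cart/",
        "/checkout/",
        "/my-account/",
        "/thank-you/",
        "/internal-reports/*.pdf$",
    ],
    allow=["/wp-admin/admin-ajax.php"],
)
print(robots_txt)
```

Generating the file from a list makes it easier to review changes and to keep staging and production rules separate.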

How to Create and Edit Your Robots.txt File

There are three primary ways to manage your robots.txt file.

Method 1: The Old-School Way (Plain Text File)

- Open a plain text editor (like Notepad on Windows or TextEdit on Mac).

- Type your rules.

- Save the file exactly as robots.txt.

- Using an FTP client (like FileZilla) or your hosting cPanel’s File Manager, upload this file to the root directory of your site (often called public_html or www).

Method 2: Editing in WordPress (The Easy Way)

This is the recommended method for 99% of WordPress users. SEO plugins like Yoast or Rank Math create a “virtual” robots.txt file for you and give you a simple editor.

- In your WordPress dashboard, go to your SEO plugin’s settings.

- Look for a tool named “File Editor” or “Robots.txt Editor.”

- You will see a text box where you can type your rules directly.

- Click “Save,” and the plugin handles the rest.

Method 3: The Built-in WordPress Setting

WordPress has a single checkbox that asks search engines to stay away from the whole site.

- In your WordPress dashboard, go to Settings > Reading.

- Find the checkbox labeled “Discourage search engines from indexing this site.”

- If you check this box, WordPress tells search engines not to index the site. Older versions did this by serving User-agent: * and Disallow: / in robots.txt; since version 5.3, WordPress adds a noindex robots meta tag to every page instead.

- This is fantastic for a site in development, but you must remember to uncheck this box the moment you go live.

For a great beginner’s guide on getting a WordPress site set up (and remembering settings like this), this video is a helpful starting point: https://www.youtube.com/watch?v=cmx5_uThbrM

How to Test Your Robots.txt Directives

Never “set it and forget it.” A typo can be devastating. Always test your rules.

The Best Tool: Google Search Console

Google Search Console is a free service that is essential for any website owner. It provides two ways to test your rules.

1. The robots.txt Report This replaced the older Robots.txt Tester, which Google retired in late 2023.

- Open Settings in Search Console and find the robots.txt report for your property.

- It shows the robots.txt files Google found for your site, when each was last fetched, and any warnings or errors.

- After an urgent change, you can request a recrawl of the file from this report.

- The report does not test individual URLs. To check whether a path (e.g., /admin/login.php) is blocked, use the URL Inspection tool described next.

2. The URL Inspection Tool (Modern Method) This is the best way to test a live URL.

- In Search Console, click “URL Inspection” at the top.

- Enter a full URL from your site that you think you are blocking (e.g., https://your-domain.com/my-account/).

- After it fetches the data, look at the “Page indexing” report.

- It will tell you “Crawl allowed? No: Blocked by robots.txt.”

- This is definitive, real-time proof that your rule is working as Google sees it.
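You can also catch typos before a file goes live by checking it locally against a list of URLs and the result you expect for each. The sketch below uses Python’s standard urllib.robotparser; check_rules, the rules, and the URLs are illustrative assumptions. One caveat: Python’s parser applies rules in file order and does not understand the * and $ patterns, so it is only a rough pre-flight check for simple prefix rules, not a substitute for Search Console.

```python
from urllib.robotparser import RobotFileParser


def check_rules(robots_txt: str, expectations: dict[str, bool], agent: str = "Googlebot") -> list[str]:
    """Return the URLs whose crawl permission differs from what was expected."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return [
        url
        for url, should_allow in expectations.items()
        if rules.can_fetch(agent, url) != should_allow
    ]


ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /my-account/
"""
EXPECTED = {
    "https://your-domain.com/": True,
    "https://your-domain.com/admin/login.php": False,
    "https://your-domain.com/my-account/": False,
    "https://your-domain.com/blog/post": True,
}
failures = check_rules(ROBOTS_TXT, EXPECTED)
print(failures or "all rules behave as expected")
```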

Other Important Directives in Robots.txt

The Disallow directive is the most common, but it’s not alone.

Sitemap:

This directive is not a crawl rule but a pointer. It tells search engines where to find your sitemap, which is the “public map” of all the pages you do want them to crawl.

- Sitemap: https://your-domain.com/sitemap_index.xml

It’s a best practice to add this to the very top or bottom of your robots.txt file. You can have multiple Sitemap: entries.

Crawl-delay:

This is a non-standard directive that asks bots to wait a certain number of seconds between crawl requests.

- Crawl-delay: 10

Googlebot ignores this directive. Google manages its own crawl rate based on your server’s health. Crawl-delay is primarily for other bots (like Bing, Yandex) and is only useful if you find that a specific bot is overwhelming your server.
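Both directives can be read back with Python’s standard urllib.robotparser, which is a handy way to confirm the file says what you think it says. The sample file below is an illustration, and site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10

User-agent: *
Disallow:

Sitemap: https://your-domain.com/sitemap_index.xml
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

print(rules.crawl_delay("Bingbot"))    # 10
print(rules.crawl_delay("Googlebot"))  # None: no delay is set in the * group
print(rules.site_maps())               # ['https://your-domain.com/sitemap_index.xml']
```

Note that Crawl-delay belongs to a User-agent group, while Sitemap stands on its own and applies to the whole file.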

Conclusion: Disallow is a Scalpel, Not a Hammer

Your robots.txt file is a small but powerful piece of your site’s technical foundation. The Disallow directive is its sharpest tool, but it’s a scalpel, not a hammer.

It is not the tool for hiding pages from search results. That job belongs to the noindex tag.

Instead, Disallow is a strategic tool for professionals. You use it to guide search engines, optimize your crawl budget, and protect the non-public parts of your website. When you build a professional site, perhaps using a flexible platform like Elementor Pro to get the design just right, you must also understand the technical files that make it run. Mastering robots.txt is a fundamental step toward running a truly professional and successful website.

Frequently Asked Questions (FAQ) About Robots.txt Disallow

1. Does Disallow remove my page from Google? No. Disallow only tells Google not to crawl the page. If the page is already in Google’s index, it will likely stay there, showing the “A description for this result is not available” message. To remove a page, you must use the noindex meta tag.

2. How long does it take for Google to see my robots.txt changes? Google caches your robots.txt file for up to 24 hours. A change you make right now might not be seen or followed by Googlebot until tomorrow. If you need a faster change, you can request a recrawl of the file from the robots.txt report in Google Search Console.

3. Can I block multiple bots at once? Yes. A group can start with several User-agent lines, and the rules that follow apply to every bot named. Use User-agent: * to cover all bots that are not matched by a more specific group.

4. What’s the difference between Disallow: /admin and Disallow: /admin/? Disallow: /admin (no trailing slash) will block /admin and /administrator and /admin-page.html. It is very broad. Disallow: /admin/ (with a trailing slash) is safer, as it only blocks files within the /admin/ folder.

5. Will Disallow block backlinks from “working”? This is a complex SEO topic. Disallow stops Googlebot from crawling the page. This prevents Google from seeing the page’s content, and it may also prevent Google from passing PageRank from that page to other pages it links to. However, Google may still count external links pointing to the disallowed page, even if it can’t crawl it.

6. How do I block all bots except Google? This is a common request. You would set it up like this:

User-agent: Googlebot

Disallow:

User-agent: *

Disallow: /

This tells Googlebot “nothing is disallowed for you,” and then tells all other bots “everything is disallowed for you.”

7. Is Disallow case-sensitive? Yes. Disallow: /My-File.pdf will not block /my-file.pdf. If you have case-variant URLs, you must block all variations.

8. What happens if I accidentally block my CSS files? Your rankings can drop. Googlebot may fail to render your page properly and misjudge its layout and content as “broken” or “thin.” You can check this using the “URL Inspection” tool in Search Console, which will show you a screenshot of how Googlebot sees your page.

9. Is Disallow a good way to block my staging site? It’s a good way, but the best way is to password-protect your staging site. This blocks 100% of all bots and unauthorized humans, whereas Disallow only blocks polite bots.

10. Where do I put the Sitemap: directive? You can put Sitemap: [your-sitemap-url] on any line in your robots.txt file, as long as it’s not in the middle of a User-agent group’s rules. Most people place all Sitemap: directives at the very top or very bottom of the file for organization.
